Orchid GPU cluster

Details of JASMIN's GPU cluster, ORCHID

GPU cluster spec

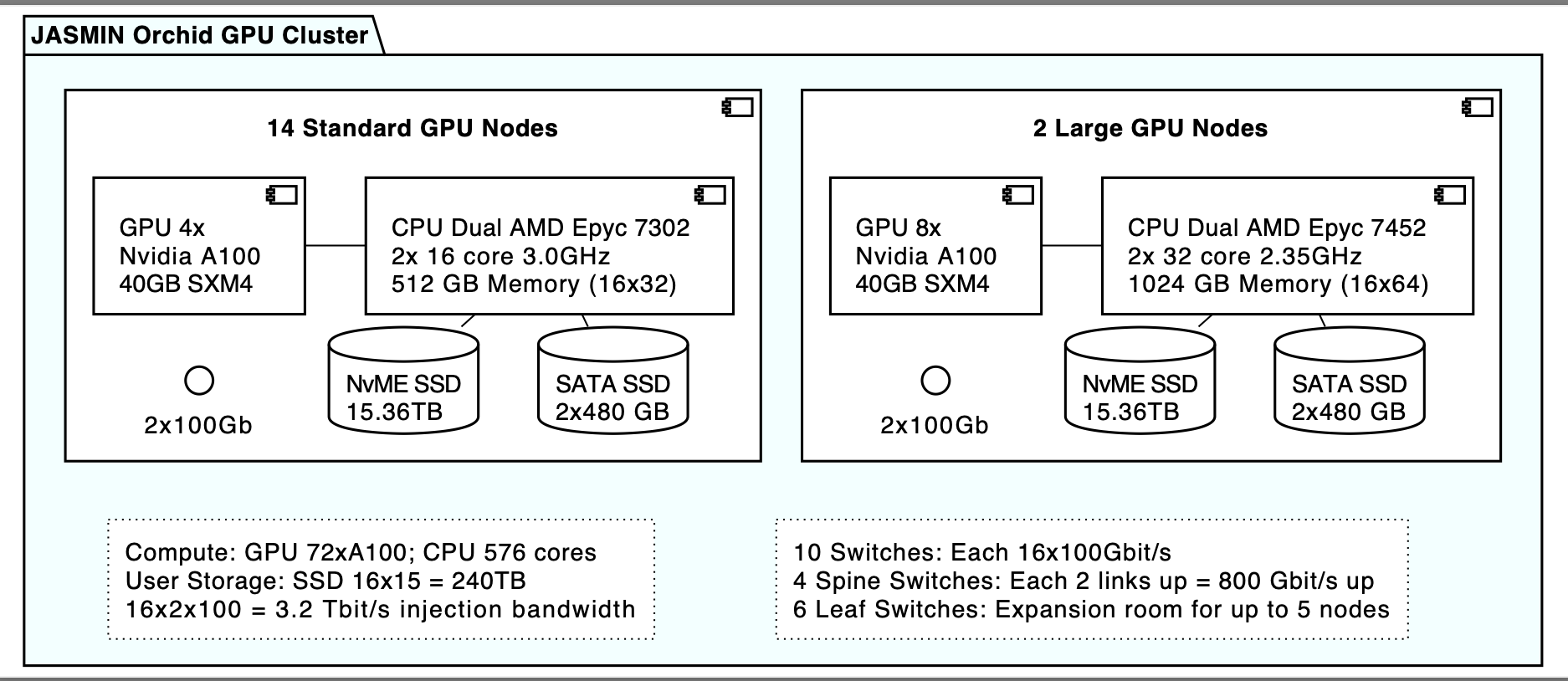

The JASMIN GPU cluster is composed of 16 GPU nodes:

- 14 x standard GPU nodes with 4 Nvidia A100 GPU cards each

- 2 x large GPU nodes with 8 Nvidia A100 GPU cards each

Note: the actual number of nodes may vary slightly over time for operational reasons. To see which nodes are currently available, check the STATE column in the output of sinfo --partition=orchid.
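As a rough sketch of the check described above, the snippet below summarises how many ORCHID nodes are in each state. The helper name count_states is hypothetical, and the output format string is just one possible choice; it assumes sinfo is on your path, as it should be on a JASMIN scientific server.

```shell
# Hypothetical helper: reads "<node> <state>" lines on stdin and
# prints one "<state> <count>" line per state, sorted by state name.
count_states() {
  awk '{ n[$2]++ } END { for (s in n) print s, n[s] }' | sort
}

# Only query Slurm if sinfo is available on this host.
if command -v sinfo >/dev/null 2>&1; then
  # -N: one line per node, -h: no header, %N: node name, %T: node state
  sinfo --partition=orchid -N -h -o "%N %T" | count_states
fi
```

Nodes reported as idle or mixed can usually accept new work, while states such as down or drained indicate nodes that are currently unavailable.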

Request access to ORCHID

Before using ORCHID on JASMIN, you will need:

- An existing JASMIN account and valid jasmin-login access role: Apply here

- Subsequently (once jasmin-login has been approved and completed), the orchid access role: Apply here

The jasmin-login access role ensures that your account is set up with access to the LOTUS batch processing cluster, while the orchid role grants access to the special LOTUS partition and QoS used by ORCHID.

Holding the orchid role also gives access to the GPU interactive node.

Note: In the supporting info on the orchid request form, please provide details on the software and the workflow that you will use/run on ORCHID.

Test a GPU job

You can test a job on the JASMIN ORCHID GPU cluster interactively by launching a pseudo-shell terminal Slurm job from a JASMIN scientific server, e.g. sci-vm-01:

srun --gres=gpu:1 --partition=orchid --account=orchid --qos=orchid --pty /bin/bash

srun: job 19505658 queued and waiting for resources

srun: job 19505658 has been allocated resources

At this point, your shell prompt will change to show the GPU node (here gpuhost004). The shell has access to the one GPU you requested, as shown by the NVIDIA utility:

nvidia-smi

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 570.133.20 Driver Version: 570.133.20 CUDA Version: 12.8 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A100-SXM4-40GB On | 00000000:01:00.0 Off | 0 |

| ... | ... | ... |

Note that for batch mode, a GPU job is submitted using the Slurm command sbatch:

sbatch --gres=gpu:1 --partition=orchid --account=orchid --qos=orchid gpujobscript.sbatch

or by adding the following preamble in the job script file:

#SBATCH --partition=orchid

#SBATCH --account=orchid

#SBATCH --qos=orchid

#SBATCH --gres=gpu:1

Notes:

- gpuhost015 and gpuhost016 are the two largest nodes with 64 CPUs and 8 GPUs each.

- IMPORTANT: CUDA Version: 12.8. Please add the following to your path:

  export PATH=/usr/local/cuda-12.8/bin${PATH:+:${PATH}}

- The following are the limits for the orchid QoS. If, for example, the CPU limit is exceeded, then the job is expected to be in a pending state with the reason being QOSGrpCpuLimit.

  | QoS | Priority | Max wall time | Max jobs per user |
  |---|---|---|---|
  | orchid | 350 | 1 day | 8 |
  | orchid48* | 350 | 2 days | 8 |

  \* We provide this QoS (orchid48) on request. If your workflow needs to run on a GPU for 2 days, please contact the JASMIN helpdesk and justify the resource request. Access to this QoS is time-bound (maximum two months).
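Putting the batch options and the notes above together, a minimal job script might look like the sketch below. The job name and the one-hour time limit are illustrative assumptions, not site requirements; the time limit only needs to stay within the 1-day limit of the orchid QoS. The `${PATH:+:${PATH}}` expansion appends `:$PATH` only when PATH is already set and non-empty, which avoids leaving a stray trailing colon.

```shell
#!/bin/bash
#SBATCH --partition=orchid
#SBATCH --account=orchid
#SBATCH --qos=orchid
#SBATCH --gres=gpu:1
#SBATCH --time=01:00:00        # assumption: 1 hour, within the 1-day orchid limit
#SBATCH --job-name=gpu-test    # hypothetical job name

# Put the CUDA 12.8 tools first on the path, as described in the notes.
export PATH=/usr/local/cuda-12.8/bin${PATH:+:${PATH}}

# Report the allocated GPU(s) if the NVIDIA utility is present.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi || echo "nvidia-smi could not query a GPU" >&2
fi
```

Saved as gpujobscript.sbatch, this can be submitted with sbatch gpujobscript.sbatch, with no further options needed on the command line. If the job stays pending, squeue --me shows the reason (for example QOSGrpCpuLimit) in its last column.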

GPU interactive node outside Slurm

There is an interactive GPU node gpuhost001.jc.rl.ac.uk, not managed by Slurm, which has the same spec as other ORCHID nodes. You can access it directly from the JASMIN login servers for prototyping and testing code prior to running as a batch job on ORCHID.

Make sure that your initial SSH connection to the login server used the -A (agent forwarding) option, then:

ssh gpuhost001.jc.rl.ac.uk

# now on gpu interactive node

Software Installed on the GPU cluster

- CUDA version 13 (other versions will be available soon via the module environment)

- CUDA DNN (Deep Neural Network Library) version 13

- cuda-toolkit - version 13.1

- Singularity-CE version 4.3.7-1.el9 - supports NVIDIA/GPU containers

- podman version 5.6.0

Please note that the cluster may have newer software available. For example, you can check the current CUDA version by running nvcc --version (nvidia-smi also shows the CUDA version, see example above). You can also check the Singularity version with singularity --version.
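The version checks mentioned above can be gathered into one short script, sketched here. The helper name report_version is hypothetical, and the list of tools is taken from the software list on this page; tools that are not installed on the current host are reported rather than causing an error.

```shell
# Hypothetical helper: print the --version output of a tool,
# or a short note if the tool is not installed on this host.
report_version() {
  if command -v "$1" >/dev/null 2>&1; then
    # Keep only the first few lines; nvcc prints a multi-line banner.
    "$1" --version 2>&1 | head -n 5
  else
    echo "$1: not found on this host"
  fi
}

for tool in nvcc singularity podman; do
  report_version "$tool"
done
```

Run it on a GPU node (for example inside an interactive srun session), since login and scientific servers may not have the CUDA toolkit installed.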